Add functionality to display results as graphs and improve the calculations. Fix bugs.

Showing

- README.md 1 addition, 1 deletionREADME.md

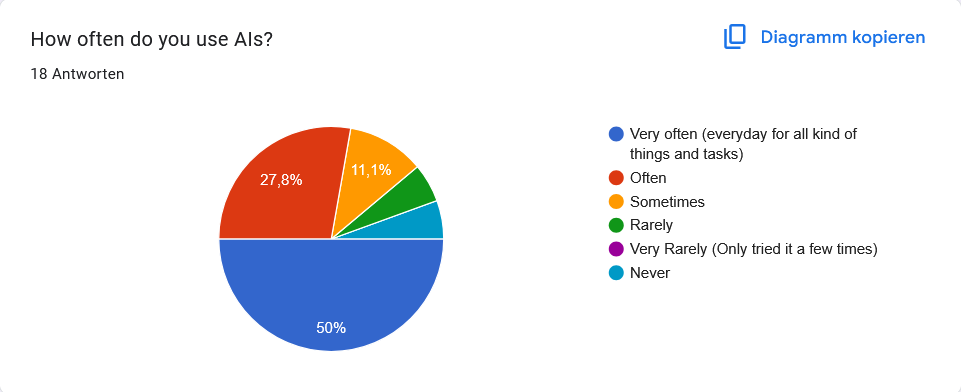

- results/ai_usage.png 0 additions, 0 deletionsresults/ai_usage.png

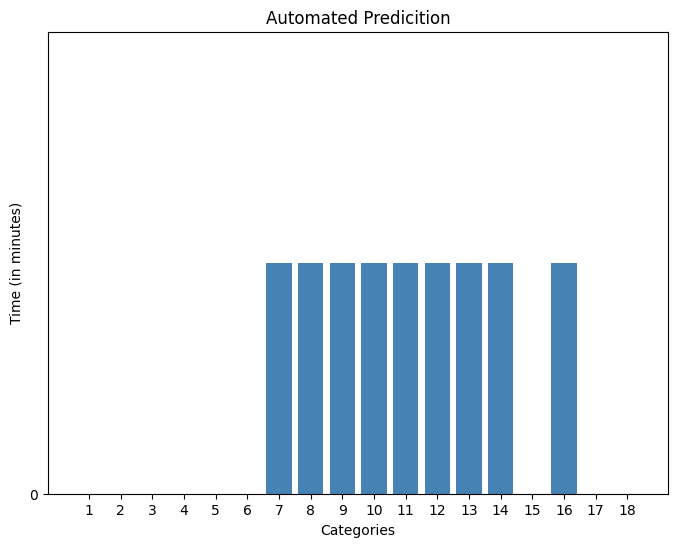

- results/automated_prediciton.png 0 additions, 0 deletionsresults/automated_prediciton.png

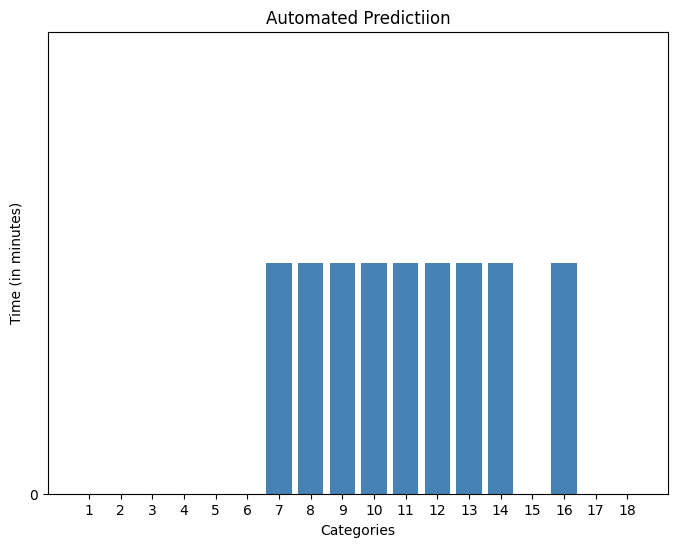

- results/automated_prediction.png 0 additions, 0 deletionsresults/automated_prediction.png

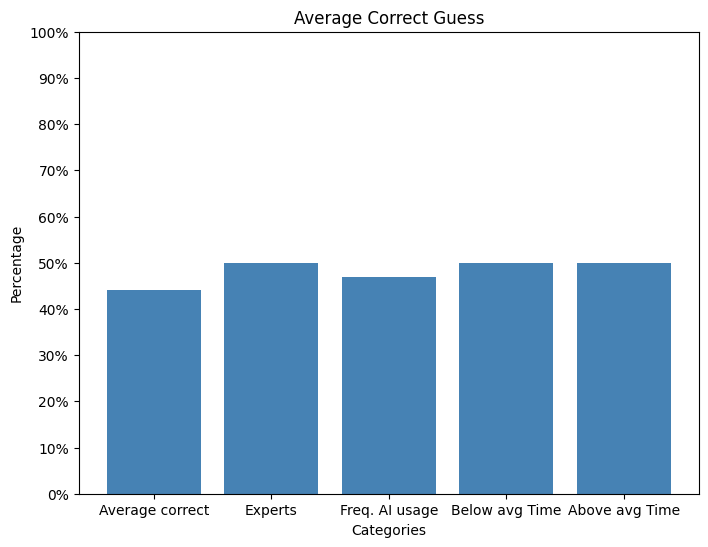

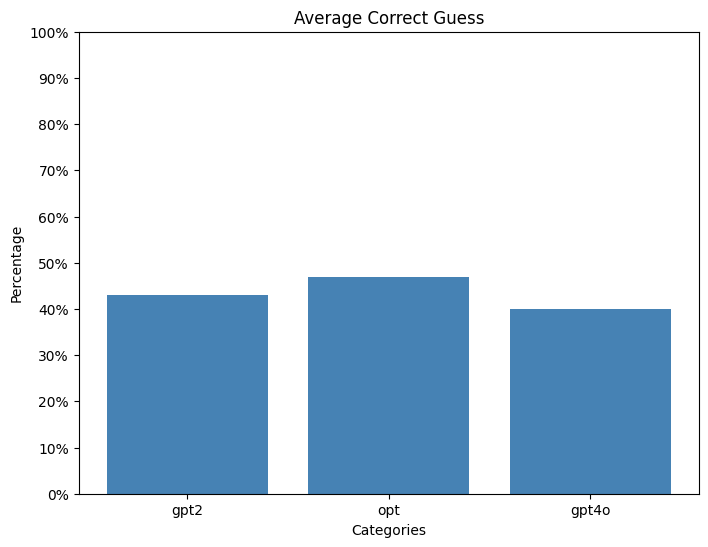

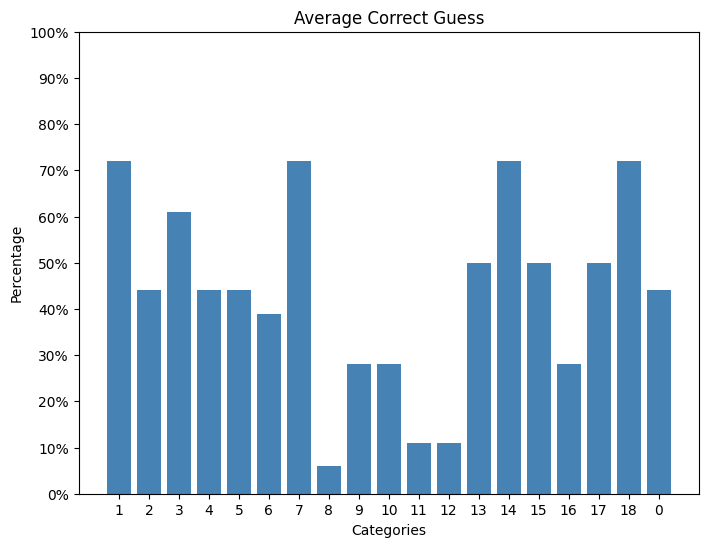

- results/correct_guess.png 0 additions, 0 deletionsresults/correct_guess.png

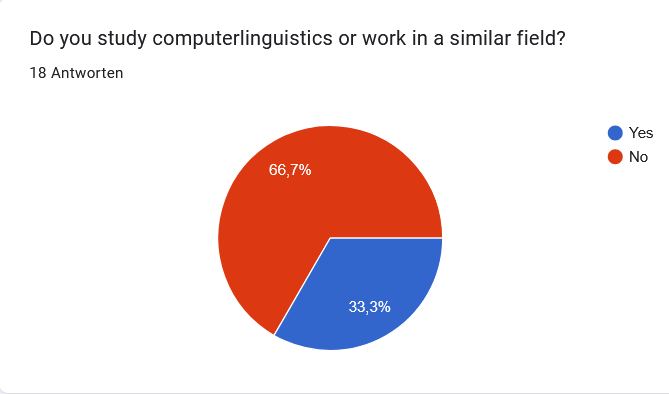

- results/expert_group.png 0 additions, 0 deletionsresults/expert_group.png

- results/model_results.png 0 additions, 0 deletionsresults/model_results.png

- results/param_matching.png 0 additions, 0 deletionsresults/param_matching.png

- results/param_scores.png 0 additions, 0 deletionsresults/param_scores.png

- results/survey_groups_correct.png 0 additions, 0 deletionsresults/survey_groups_correct.png

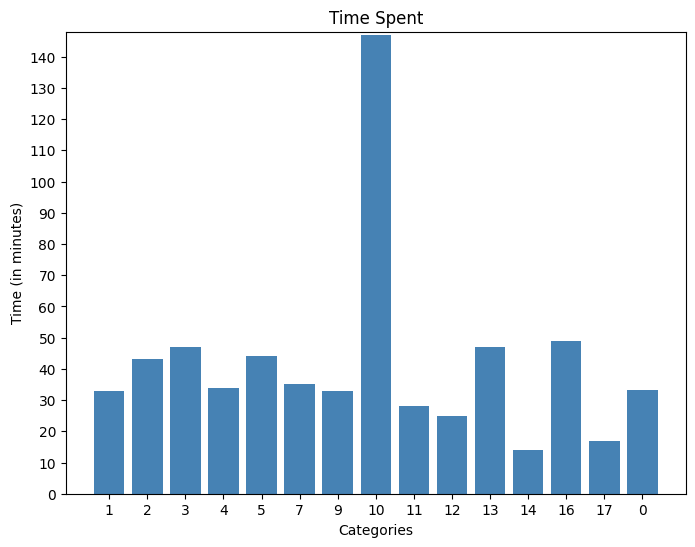

- results/time_spent.png 0 additions, 0 deletionsresults/time_spent.png

- src/README.md 4 additions, 0 deletionssrc/README.md

- src/__pycache__/asses_results.cpython-312.pyc 0 additions, 0 deletionssrc/__pycache__/asses_results.cpython-312.pyc

- src/__pycache__/automatic_prediciton.cpython-312.pyc 0 additions, 0 deletionssrc/__pycache__/automatic_prediciton.cpython-312.pyc

- src/__pycache__/compute_metrics.cpython-312.pyc 0 additions, 0 deletionssrc/__pycache__/compute_metrics.cpython-312.pyc

- src/asses_results.py 124 additions, 32 deletionssrc/asses_results.py

- src/automatic_prediciton.py 38 additions, 29 deletionssrc/automatic_prediciton.py

- src/compute_metrics.py 0 additions, 0 deletionssrc/compute_metrics.py

- src/display_results.py 188 additions, 0 deletionssrc/display_results.py

results/ai_usage.png

0 → 100644

20.1 KiB

results/automated_prediciton.png

0 → 100644

14.7 KiB

results/automated_prediction.png

0 → 100644

14.8 KiB

results/correct_guess.png

0 → 100644

27.7 KiB

results/expert_group.png

0 → 100644

12.7 KiB

results/model_results.png

0 → 100644

22.1 KiB

results/param_matching.png

0 → 100644

33.7 KiB

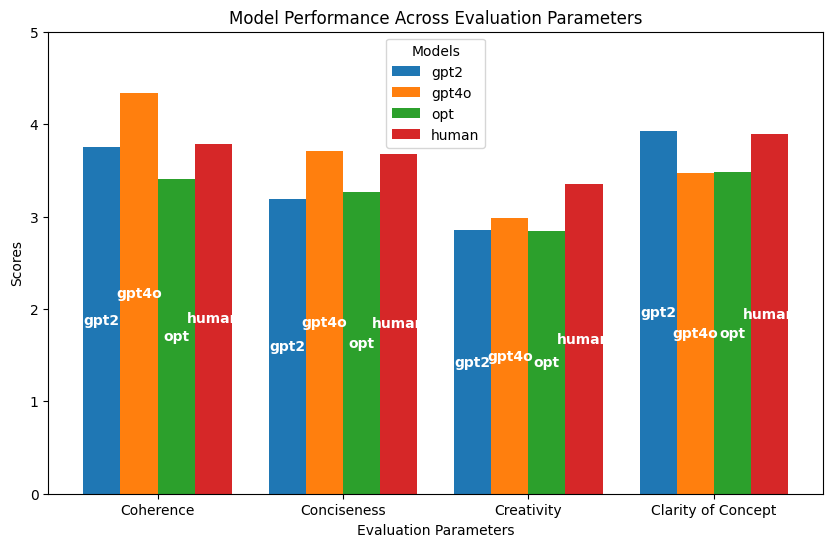

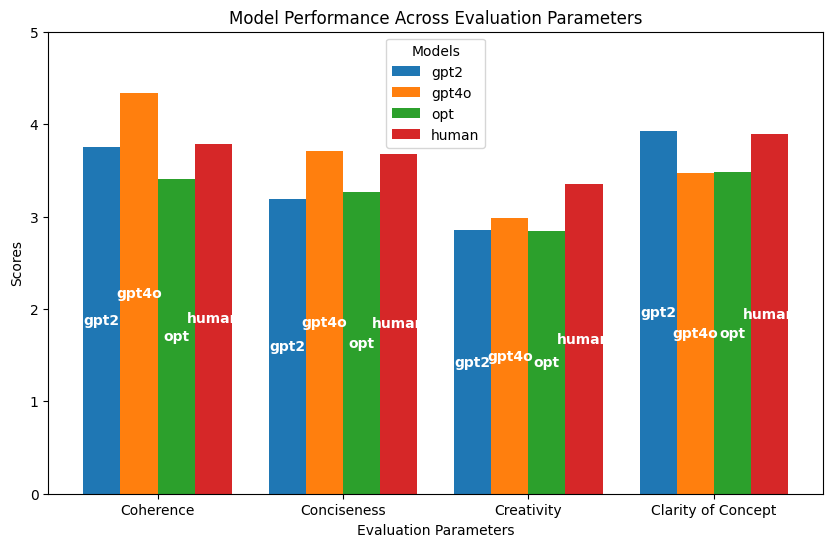

results/param_scores.png

0 → 100644

33.7 KiB

results/survey_groups_correct.png

0 → 100644

24.5 KiB

results/time_spent.png

0 → 100644

19.9 KiB

src/README.md

0 → 100644

File added

File added

File added

File moved

src/display_results.py

0 → 100644